A few musings on software, biological systems, functional programming, and the rituals of writing to a database.

The billion natural shocks that flesh is heir to

Over the course of a lifetime, the entropy of a human body increases more or less monotonically. From mutations and parasites to scratches and scars, the body accumulates billions of tiny errors, and a lot of larger ones as well, through the processes of aging, infection, cancer, etc.

This increase in entropy isn't the inexorable, Second Law-type increase. Our bodies aren't closed systems, and decay isn't strictly physically inevitable. Single-cell organisms, for example, are effectively immortal.

No, the entropy that plagues our bodies is the more mundane variety that plagues all complex systems. It's just hard to maintain intricate complexity in the face of a chaotic, sometimes hostile world.

We do our best to correct for errors on the fly — by healing wounds, repairing or scrapping mutant DNA, and fighting off infections — but our bodies are just too big and complex to keep perfectly pristine.

So when we reproduce, we don't cleave our bodies down the middle and re-grow the other halves. Instead, we do a complete reboot — by creating a fresh double-helix of DNA and regrowing a body from scratch.[1] The new body of a child has much lower entropy — at least for a few years.

Incidentally, this is also the mechanism used by the 'immortal jellyfish' to achieve its feat of longevity. The immortal jellyfish engages in reboots that aren't reproductive (i.e., don't result in new jellyfish), but the principle is the same. Restarting from scratch solves the problem of entropy for complex biological systems.

Entropy and rebootable processes in software systems

Software systems are, perhaps surprisingly, a lot like biological systems. They're especially alike in their dizzying complexity, which makes both types of systems subject to the same pernicious effects of entropy.

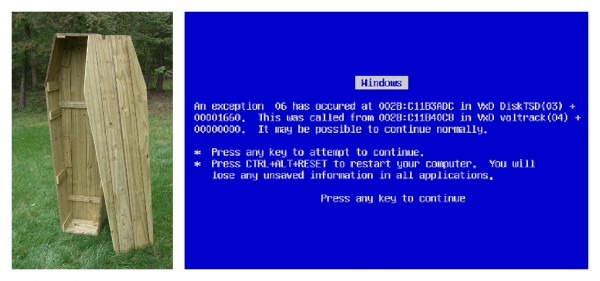

Software applications (Safari, Chrome, Eclipse) degrade over time as garbage objects build up, caches become inconsistent, and other small errors accumulate. Operating systems themselves (Windows, OS X, Linux), when they've been running for days or months, also accumulate entropy (bad state) and become less and less stable. And when a computer has been running the same OS for years, it collects a lot of junk (much of it 'foreign', i.e., viruses and other rogue code) and eventually becomes corrupted. In each case, the increase in entropy results in the death of the system.

And in software, as in life, we have a solution that takes us back to a pristine state: periodic reboots. We kill and re-launch an application, reboot the computer, or reinstall the OS.

No system has 100% uptime, and the only way to approach immortality is to periodically restart from scratch.

Systems that are designed to accommodate reboots are much more robust — e.g. stateless servers in a distributed system, or memory isolation in an OS (which allows applications to restart gracefully, without taking down the rest of the machine).
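To make the idea of designing for reboots concrete, here is a minimal Python sketch. Everything in it is hypothetical: `store` is a plain dict standing in for durable storage, `make_flaky_task` simulates a process that occasionally crashes, and `run_with_restarts` plays the role of a supervisor. The point is only that when the important state lives outside the process, a restart loses nothing.

```python
def run_with_restarts(task, store, max_restarts=3):
    """Run task(store), restarting it from scratch whenever it crashes."""
    restarts = 0
    while True:
        try:
            task(store)
            return restarts
        except RuntimeError:
            if restarts == max_restarts:
                raise
            restarts += 1  # throw away the dead process and start a fresh one


def make_flaky_task(crash_at):
    """Build a task that counts to 5, crashing once at each value in crash_at."""
    already_crashed = set()  # test harness only, not part of the "process"

    def task(store):
        # All progress is read from and written to the store, so a restarted
        # task resumes where the previous one left off.
        while store["count"] < 5:
            current = store["count"]
            if current in crash_at and current not in already_crashed:
                already_crashed.add(current)
                raise RuntimeError("simulated crash")
            store["count"] = current + 1

    return task


store = {"count": 0}  # stands in for a database or file
restarts = run_with_restarts(make_flaky_task({2, 4}), store)
```

The task itself holds nothing worth keeping, which is what makes killing and relaunching it cheap.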

Functional programming

Functional programming is powerful in part because it's less susceptible to the effects of entropy. A stateless, side-effect-free function is a lot like a rebootable process. Every invocation of such a function is a from-scratch restart of a tiny little process, and without side effects, the machine goes back to its initial state when the function returns. Since there are no bits left lingering around (exposed to the effects of entropy), functional modules tend to suffer far fewer bugs than their stateful counterparts.
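A small Python sketch of the contrast, with hypothetical names (`RunningAverage`, `average`) chosen purely for illustration: the stateful version gives different answers to the same input depending on its history, while the pure version starts from scratch on every call.

```python
class RunningAverage:
    """Stateful: every call leaves bits behind that shape the next answer."""

    def __init__(self):
        self.total = 0.0
        self.count = 0

    def add(self, value):
        self.total += value
        self.count += 1
        return self.total / self.count


def average(values):
    """Pure: the result depends only on the argument, and nothing lingers."""
    return sum(values) / len(values)


fresh = RunningAverage()
used = RunningAverage()
used.add(1)

fresh_answer = fresh.add(3)    # 3.0
used_answer = used.add(3)      # 2.0, because of what came before
pure_answer = average([1, 3])  # 2.0 every time, regardless of history
```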

Even non-functional (imperative) programs can benefit from this way of understanding the effects of entropy. An imperative program doesn't have the same restart-from-scratch mechanics, but we can reduce the 'surface area' in which entropy accumulates by making a module less stateful. Some patterns:

- Immutable objects. If an object can't change, it can't suffer the effects of entropy.

- Don't store the same state in two different places. The second copy can easily get out of sync with the first copy; better to designate one location as canonical.

- Encapsulation. Controlling access to a module, and especially to actions that modify state, helps protect the module from external sources of entropy.
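The three patterns above can be sketched in a few lines of Python. The `Point` and `Cart` classes are hypothetical examples, not taken from any particular codebase, and the leading underscore on `_prices` is only Python's convention for "private", not an enforced barrier.

```python
from dataclasses import dataclass, FrozenInstanceError


@dataclass(frozen=True)
class Point:
    """Immutable object: attributes cannot be reassigned after creation."""
    x: float
    y: float


class Cart:
    def __init__(self):
        # The single canonical copy of the state.
        self._prices = []

    def add_item(self, price):
        # Encapsulation: the only way to modify state, and it checks its input.
        if price < 0:
            raise ValueError("price must be non-negative")
        self._prices.append(price)

    @property
    def total(self):
        # Derived on demand rather than stored as a second copy that
        # could drift out of sync with the list of prices.
        return sum(self._prices)


point = Point(1.0, 2.0)
try:
    point.x = 5.0
    mutated = True
except FrozenInstanceError:
    mutated = False

cart = Cart()
cart.add_item(2.5)
cart.add_item(1.5)
```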

Caching, in this view, is an anti-pattern. Caches are cesspools for entropy. As Phil Karlton famously quipped, there are only two hard problems in computer science: cache invalidation and naming things. Caches, though often a necessary evil, should raise the hackles (or pique the nose) of any engineer who considers adding one to a system.
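Here is a deliberately tiny Python illustration of how a cache goes bad. The names (`prices`, `get_price_cached`, `set_price`) are made up for the example. The cache is a second copy of the state, so any write that bypasses the invalidation step leaves it stale.

```python
prices = {"apple": 1.00}  # canonical source of truth
_cache = {}               # second copy, kept for speed


def get_price_cached(item):
    if item not in _cache:
        _cache[item] = prices[item]
    return _cache[item]


def set_price(item, value):
    prices[item] = value
    _cache.pop(item, None)  # invalidation: the step that is easy to forget


first_read = get_price_cached("apple")   # 1.0, and now cached

prices["apple"] = 2.00                   # a write that skips set_price
stale_read = get_price_cached("apple")   # still 1.0: the cache is out of sync

set_price("apple", 3.00)                 # a write that invalidates properly
fresh_read = get_price_cached("apple")   # 3.0
```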

For rebootable processes, persistent data is sacred

I use the term sacred here in Durkheim's sense — that is to say, set apart and cared for with great respect, on account of its importance to the system.

In the case of life, DNA is the persistent data: the key piece of state that survives across reboots.[2] DNA is clearly treated as sacred by the organism. It is isolated in the nucleus, in the center of the cell, and it interacts with the rest of the cell through only a few narrowly prescribed channels.

In a software system, the state stored in a database (or filesystem) is what allows us to reboot a system without loss of continuity. And we treat these data as sacred bits. We set them apart and care for them with great respect, to keep them as pristine as humanly (and machine-ly) possible.

You could even say we have rituals for writing to a database:

- Validate the data

- Sanitize it

- Formulate the request to write the data in a precise, formalized manner

- Submit the request to an entity that specializes in maintaining the sacred bits

(A database is like a priest trained in the sacred practices of communing with data.)
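As a rough sketch, the four steps of the ritual might look like this in Python, using the standard-library `sqlite3` module. The `users` table, the `save_user` function, and the specific validation rules are assumptions invented for the example.

```python
import sqlite3


def save_user(conn, name, age):
    # 1. Validate the data.
    if not isinstance(name, str) or not name.strip():
        raise ValueError("name must be a non-empty string")
    if not isinstance(age, int) or not 0 <= age <= 150:
        raise ValueError("age must be an integer between 0 and 150")

    # 2. Sanitize it (here: collapse stray whitespace).
    clean_name = " ".join(name.split())

    # 3. Formulate the request precisely: a parameterized statement,
    #    never a string glued together from user input.
    sql = "INSERT INTO users (name, age) VALUES (?, ?)"

    # 4. Submit it to the specialist. Using the connection as a context
    #    manager commits on success and rolls back on error.
    with conn:
        conn.execute(sql, (clean_name, age))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT NOT NULL, age INTEGER NOT NULL)")
save_user(conn, "  Ada   Lovelace ", 36)
rows = conn.execute("SELECT name, age FROM users").fetchall()
```

Data that fails validation never reaches the database at all, which is the point of performing the ritual in this order.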

Notice that we speak of clean and dirty data, sanitizing inputs, corrupted files, etc. It's no coincidence that these rituals evoke the moral emotion of purity. Purity and its cousin, sanctity, are concepts often evoked during rituals that pertain to sacred elements. Examples in the human world include removing your shoes before entering a temple, dressing in white for First Communion, and the entire Christian meme-complex of sin (as moral stain).

Both software and moral systems benefit from the purity/cleanliness metaphor because it allows us to reason about an abstract domain (data, human behavior) using a much more concrete and familiar domain (our bodies). We understand instinctively — and through early childhood learning — that it's important to keep our bodies clean, that we shouldn't eat dirty things, etc. And so we co-opt those instincts to help us reason about data and about human behavior.

Beyond software and biology

Where else can we take these ideas? They would seem to apply to any sufficiently complex system. Here are a few quick thoughts:

- Companies. If Charles Stross is to be believed, the half-life of a company is roughly 30 years. In most cases, corporate deaths are due to evaporating niches, but accumulated entropy certainly doesn't help. In the case of a company, entropy would include things like misallocated capital, internal fiefdoms, fragmented culture, or salaries that no longer match the value of the employees to whom they're being paid. (The problem of 'sticky wages' is so important, in fact, that it's one of the major justifications for inflation.) The effects of entropy might also be one of the reasons startups are so appealing. When you start a company from scratch, you won't have much infrastructure to support you, but you won't have to deal with organizational cruft either.

- Civilizations. The idea of civilizational cycles is fraught with the perils of speculation, but nonetheless very appealing. We speak of civilizational decline, even decay. If a company can accumulate entropy, perhaps a civilization can too. But are these concepts valid (in a causative/explanatory sense) or mere confabulation?

- Economies. Can an entire economy accumulate entropy? In the short term, the answer is surely yes. But unlike many other complex systems, an economy is extremely resilient. It's a network comprising millions of intelligent nodes, and when one part of the network starts to decay, the neighboring nodes are adaptable enough to route around the damage. Perhaps this explains how other distributed networks (e.g. the internet) achieve such longevity, in spite of how complex they are.

___

Endnotes

[1] Note that sexual reproduction solves more than just the problem of entropy. It also helps us compete in evolutionary arms races, especially against parasites. See e.g. the Red Queen hypothesis.

[2] The cellular machinery in the egg (proteins, membranes, organelles, etc.) also persists across reboots, but it's more like hardware than data.

Melting Asphalt
