IQ tests measure what's known as g. This stands for general factor or general intelligence, but (depending on how you look at it) it's actually a rather specific type of intelligence: the ability to reason about abstract concepts. It's a facility with words, numbers, symbols, patterns, and formal systems. If X then Y. Complete the sequence. Which word fits best in this sentence. Etc.

This is known as decontextualized reasoning, so-called because context is irrelevant to the reasoning process. It doesn't matter what X and Y are — all that matters is their formal relationship. Engineers and lawyers are archetypical specialists in this type of reasoning. It can be characterized as third-person, disembodied, offline, abstract, formal, symbolic, representational, explicit, analytical, general, and theoretical. For those steeped in the Myers-Briggs typology, decontextualized reasoning corresponds to the N style.

This contrasts with contextualized reasoning, more commonly known as embodied reasoning. In embodied reasoning, details and context are paramount. Police work and acting are professions that rely on embodied reasoning. It can be characterized as first-person, embodied, online, situated, concrete, relational, empathic, enactive, specific, and practical. In the Myers-Briggs typology, this corresponds to the S style.

Philosophers (at least in the analytic tradition) are masters of decontextualized reasoning, so it should be no surprise that their very methods presuppose a decontextualized view of the world.

In no domain is this more apparent, or more dangerous, than in the field of moral philosophy.

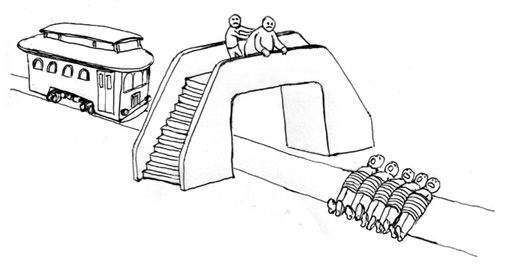

The Trolley Problem

Consider one of the classic thought experiments used to provoke our moral intuitions:

A trolley is hurtling down a track towards five people. You are on a bridge under which it will pass, and you can stop it by dropping a heavy weight in front of it. As it happens, there is a very fat man next to you. Your only way to stop the trolley is to push him over the bridge and onto the track, killing him to save five. Should you proceed?

(Well — should you?)

This thought experiment is known as the Trolley Problem. When philosophers present the Trolley Problem (or any of its variants or cousins), the goal is to elicit moral intuitions. These intuitions are then distilled into principles about what constitutes valid (or invalid) moral reasoning. And principles ultimately dictate which actions are (or aren't) morally defensible, i.e., whether they're right or wrong.

So — should you push the fat man onto the track to stop the trolley? Let's see what your response says about the kind of moral reasoner you are.

If you think that it's OK to kill the fat man to save the other 5 people, you've just engaged in consequentialist moral reasoning. You believe that the ends (saving 5 lives) justify the means (sacrificing 1 innocent). You might take this further and say that the only valid basis for moral reasoning is to maximize people's well-being (the greatest good for the greatest number), which would make you a utilitarian — a specific type of consequentialist. All well and good.

If you think that it's NOT OK to kill the fat man to save the other 5 people, you've probably engaged in a form of deontological moral reasoning. If you're not familiar with that term, don't be put off: it just means the opposite of consequentialist, namely, that the ends don't justify the means. Instead, the deontological position says that behavior is right or wrong on the basis of whether it conforms to particular rules. (The rules themselves are, of course, very much up for debate.)

But this is textbook stuff. I didn't come here to regurgitate Wikipedia.

Instead, here's what I think is more interesting.

If you gave any answer to the Trolley Problem, then you're the kind of person who's eager to play the game of decontextualized reasoning. You think that intuitions elicited from simple scenarios are useful for extracting deeper, more general principles. You probably trust your own ability to reason abstractly, and you enjoy taking abstract principles and applying them to specific situations.

If instead you refused to give an answer to the Trolley Problem, then you're the kind of person who's skittish about decontextualized reasoning. You think questions about moral behavior are highly contextual (situation-specific), and you're reluctant to prescribe (or proscribe) behavior in underspecified scenarios. You feel uneasy making generalizations, preferring to reason about concrete situations (in all their concrete, nuanced detail), rather than about the abstract properties of those situations.

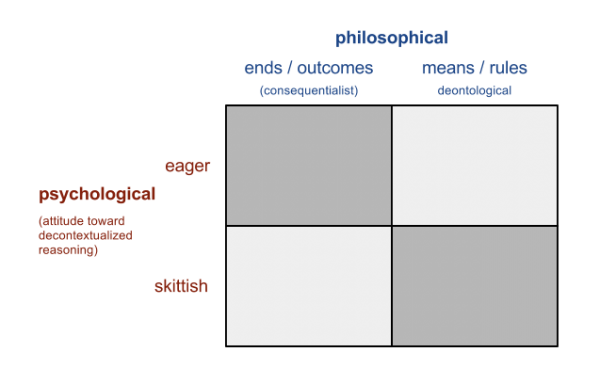

Two dimensions

I'm not trying to argue that one style is better or more appropriate than another (yet). I'm trying to show that there are two different dimensions that influence how we reason about moral dilemmas. On the one hand, there's the classic (philosophical) dimension of ends-based vs. means-based reasoning, aka consequentialist vs. deontological. And on the other hand, there's the (psychological) dimension of one's attitude toward decontextualized reasoning, eager vs. skittish.

In practice, these two dimensions are correlated. Given the Trolley Problem, for example, those who are eager to reason decontextually will be more inclined to sacrifice the fat man, whereas those who are skittish about decontextualized reasoning will be less inclined to sacrifice him.

Or to put it more provocatively: The Aspier someone is, the more likely it is that he'll be an eager utilitarian. (You probably know people like this. Anyone who self-identifies as a 'utilitarian' probably has Aspie tendencies — very fluent with abstract concepts, and very eager to apply them to all aspects of life.)

Now it's time to put my cards on the table.

I'm someone who specializes in decontextualized reasoning, often to the exclusion of other forms of intelligence. The more practical something is, the more I turn up my nose at it. I've studied computer science and philosophy (two of the more decontextualized disciplines), and I consider myself (at least a little bit) Aspie.

I'm also an unabashed consequentialist. I believe (following Scott Siskind) that utilitarianism is the only moral system that can achieve "reflective equilibrium," and that "all other moral systems are subtly but distinctly insane."

But I think there's more danger in decontextualized reasoning — and more value in deontological reasoning — than I've historically appreciated. The rest of this essay will discuss these critiques of eager utilitarianism.

Decontextualizing is dangerous

To explore the dangers of decontextualized reasoning, let's consider a few more scenarios. These are taken from Jonathan Haidt's research [1] into moral psychology. The question you should ask after each scenario is, "Did the participants do anything wrong?"

- Susan had a cat named Mickey for many years. Eventually Mickey died of a heart attack. Susan was very upset by the loss of Mickey, and she put a picture of him in the living room to remember him by. She isn't sure what to do with his body, so after some thought she decides to simply cook Mickey and eat him. She uses his body to make a large batch of chili that lasts her nearly a week.

- Julie and Mark are brother and sister. They are traveling together in France on summer vacation. One night they are staying alone in a cabin near the beach. They decide that it would be fun to make love. Julie was already taking birth control pills, but Mark uses a condom, too, just to be safe. They both enjoy making love, but they decide not to do it again. They keep that night as a secret between themselves.

- A man goes to the supermarket once a week and buys a chicken. But before cooking the chicken, he has sexual intercourse with it. Then he cooks it and eats it.

Each of these scenarios represents a "harmless taboo violation." As the stories make clear, there's no suffering involved (whether human or animal), and the taboo violations take place in private, to preempt objections that innocent bystanders would be disgusted. The scenarios are thereby designed to provoke our moral intuitions specifically on matters that don't involve harm, care, fairness, equality, etc.

After probing subjects from a number of different national and socioeconomic groups, Haidt found that the WEIRDer a subject is, the more likely she is to say that the taboo violations are morally OK.

WEIRD here stands for Western, educated, industrialized, rich, and democratic, and I don't think it's a coincidence that these attributes (or at least the first three) make someone more likely to be trained in decontextualized reasoning. Western, educated, industrialized people are the population for whom IQ tests are written and for whom the scores are most relevant. We've grown up on math, logic games, the 5-paragraph essay — and we live in a world shaped by finance, media, engineering, law, etc.

For those of us who excel at decontextualized reasoning, it's easy to parse the words, decide if the scenario causes net harm or good, and pass judgment accordingly.

We treat these scenarios like SAT questions or puzzles on an IQ test. But the real world is a lot messier than a word problem.

Consider the last scenario. Essentially it's asking, "If nothing else goes wrong, is it OK for a man to have sex with a dead chicken?" But whether you buy the premise (nothing else going wrong) is exactly what's at stake, and it depends on your attitude toward decontextualized reasoning.

Someone who's skittish about decontextualized reasoning will try to contextualize the scenario (treat it like a scene in the real world). Such a person might argue as follows:

Wait wait wait. Let's stop and think about this for a minute — really think about it.

Let's imagine the kind of man who pleasures himself with a chicken carcass. Do you actually know anyone like that? Would you want someone like that in your community? Would you want to be friends with him? Would you let your children go over to play at his house? Would you ever marry such a man?

Having sex with a dead bird is so far beyond the pale (of what's considered normal and proper) that it's cause for real concern. If this is all we know about the guy, we can (statistically speaking) infer a higher likelihood of other deviant behaviors — ones that may not be as harmless.

Put simply: if he's willing to violate this taboo, there's no telling what else he's capable of.

It's true that the scenario, as written, doesn't involve any direct negative consequences. But it's also reasonable to view this as a story about a real human being: someone situated in a community, who needs to learn and follow the norms of that community in order to interact harmoniously with others.

When you contextualize the man in the story — view him through a situated, embodied, relational lens — you won't be so charitable towards his actions.

And in many ways, that's a more honest approach to take when presented with a moral dilemma. Even WEIRDos like you and me would pass judgment on the chicken man if we met or heard about him in real life. But we've been so heavily trained to use the third-person, decontextualized, legal perspective that we've lost touch with our own, native, first-person perspective. Our willingness to accept the premises of a scenario unconditionally, and to reason about it so stiffly and formally, is at least a little bit suspect.

Please note that I am not arguing for a society that punishes (or enacts laws that forbid) this kind of private, harmless behavior. A society that does so is making a grave mistake that will produce very bad outcomes for its people. I'm just pointing out that it's not crazy to be concerned, morally or otherwise, about this kind of private behavior, and that such concerns arise pretty naturally when you take the embodied/contextualized perspective.

Deontological heuristics

I wrote about heuristics a couple weeks ago in a post titled Code Smells, Ethical Smells. I noted that (1) the social world is intractably complex, and (2) humans are fallible and often overconfident (i.e., prone to hubris). In light of those facts, I argued that it's often safer to follow your 'nose' than to trust the results of your calculations. Smell here is a metaphor for intuition, which can detect lurking danger long before the rest of your brain realizes what's going on.

Learning to identify bad smells is a heuristic — a way of approximating the results of an otherwise intractable calculation.

The concept of calculations and heuristics applies nicely to the domain of moral reasoning. A consequentialist moral system, if you recall, cares only about the end results. Consequentialist reasoning, therefore, entails 'doing the full calculation' to see what the results of a particular action will be. Maybe the action will result in net good, maybe it will result in net harm, or maybe your calculation will go wrong somewhere. In fact, given the intractability of the social world and our propensity to hubris, it's a near certainty that you'll introduce an error (or worse, a bias) into your calculation.

This is where deontological reasoning steps in (to the rescue?). In a deontological moral system, an action is right or wrong on the basis of whether it conforms to particular rules of conduct. Our culture abounds with such rules: don't steal, don't kill people, treat others as they would like to be treated, etc. These rules are much easier to apply, and much less prone to errors and bias, than doing the full consequential calculation.

If you view these rules as emanating from a Cosmic Moral Order, where the rules themselves have value apart from their consequences, you are (subtly but distinctly) insane. But if you treat such rules as heuristics — as simpler, more effective ways of achieving good outcomes — then you are grounded, sanely, in what really matters.

Conclusion

Please humor me for a moment as I wax advocative. I'd like to make a brief plea for skittish utilitarianism.

Let's unpack the term. Utilitarianism is the idea that, deep down, all that truly matters are the ends (not the means), and the end we should strive for is maximizing the well-being of conscious creatures. But (and this is the important part) we need to be exceptionally wary in how we apply the utilitarian principle. Like a horse startling at the slightest whiff of danger, we humans should recoil when utilitarian conclusions offend our moral noses.

Decontextualized reasoning is a tremendously powerful tool. It gives us the ability to step back from our local, provincial, idiosyncratic intuitions, and generalize them into very deep, far-reaching principles. Decontextualized reasoning is what leads us to utilitarianism, and that's a Very Good Thing.

But if decontextualized reasoning excels at generating theories, it's terrible at applying them in concrete, messy, all-too-human situations. We're good at calculating (here in the educated, industrialized West), but we give our calculations more credence than they deserve, and take action based on simplified, often dehumanizing premises. We need to be aware of our weaknesses, and err on the side of caution until we're absolutely certain our calculations should overrule our intuitions.

In A Conflict of Visions, Thomas Sowell writes about two competing views of human nature. The unconstrained (or utopian) vision treats man and society as perfectible, and sees the past as 'getting in the way' of progress. The constrained (or tragic) vision treats man as inherently flawed, and distrusts his schemes for creating a perfect society. In the constrained vision, the traditions we've inherited from the past are invaluable heuristics that keep us from making hell with our good intentions.

We mustn't shrink from the utilitarian principle, but the constrained vision of human nature warns us to be skittish around it.

___

Endnotes

[1] Jonathan Haidt's research. Haidt's research program is descriptive rather than normative. He wants to understand moral reasoning as it's practiced 'in the wild', rather than promote a particular brand of moral reasoning. Nevertheless, the scenarios provide good talking points.

I've cited some of my sources inline, but credit for a lot of the ideas in this essay also goes to:

- Scott Siskind's witty, wonderful, and authoritative FAQ on consequentialism. [Update 2017/11/21: original link expired; now linking to the Internet Archive.]

- Lee Corbin and Eric Lin, for interesting discussions and thought experiments

- Jonathan Haidt (The Righteous Mind, The Happiness Hypothesis, What Makes People Vote Republican?)

- Iain McGilchrist (The Divided Brain and the Search for Meaning)

- Sam Harris (The Moral Landscape)

Trolley Problem illustration courtesy of Advocatus Atheist.

Melting Asphalt