(Originally published at Ribbonfarm.)

To scandalize a member of the educated West, open any book on European table manners from the middle of the second millennium:

"Some people gnaw a bone and then put it back in the dish. This is a serious offense." — Tannhäuser, 13th century.

"Don't blow your nose with the same hand that you use to hold the meat." — S'ensuivent les contenances de la table, 15th century.

"If you can't swallow a piece of food, turn around discreetly and throw it somewhere." — Erasmus of Rotterdam, De civilitate morum puerilium, 1530.

To the modern ear (and stomach), the behaviors discussed here are crude. We're disgusted not only by what these authors advocate, but also by what they feel compelled to advocate against. The advice not to blow one's nose with the meat-holding hand, for example, implies a culture where hands do serve both of these purposes. Just not the same hand. Ideally.

These were instructions aimed at the rich nobility. Among serfs out in the villages, standards were even less refined.

To get from medieval barbarism to today's standard was an exercise in civilization — the slow settling of our species into domesticated patterns of behavior. It's a progression meticulously documented by Norbert Elias in The Civilizing Process. Owing in large part to the centripetal forces of absolutism (culminating at the court of Louis XIV), manners, and the sensibilities to go with them, were first cultivated, then standardized and distributed throughout Europe.

But the civilizing process isn't just for people.

UX is etiquette for computers

embarrass, verb. To hamper or impede (a person, movement, or action) [archaic].

In 1968 Doug Engelbart gave a public demo of an information management system, NLS, along with his new invention, the mouse. Even from our vantage in 2013, the achievement is impressive, but at the same time it's clumsy. The demo is frequently embarrassed by actions that have since become second nature to us: moving the cursor around, selecting text, copying and pasting.

It's not that the mouse itself was faulty, nor Engelbart's skill with it. It was the software. In 1968, interfaces just didn't know how to make graceful use of a mouse.

Like medieval table manners, early UIs were, by today's standards, uncivilized. We could compare the Palm Pilot to the iPhone or Windows 3.1 to Vista, but the improvements in UX itself are clearest on the web. Here our progress stems not from better hardware, but from much simpler improvements — in layout, typography, color choices, and information architecture. It's not that we weren't physically capable of a higher standard in 1997; we just didn't know better.

A focus on appearance is just one of the ways UX is like etiquette. Both are the study and practice of optimal interactions. In etiquette we study the interactions among humans; in UX, between humans and computers. (HHI and HCI.) In both domains we pursue physical grace — "smooth," "frictionless" interactions — and try to avoid embarrassment. In both domains there's a focus on anticipating others' needs, putting them at ease, not getting in the way, etc.

Of course, not all concerns are utilitarian. Both etiquette and UX are part function, part fashion. As a practitioner you need to be perceptive and helpful, yes, but to really distinguish yourself, you also need great taste and a finger on the pulse of the zeitgeist. A designer should know if 'we' are doing flat or skeuomorphic design 'these days,' just as a diner should know if he should be tucking his napkin into his shirt or holding it on his lap. And in both domains, it's often better to follow an arbitrary convention than to try something new and different, however improved it might be.

As software becomes increasingly complex and entangled in our lives, we begin to treat it more and more like an interaction partner. Losing patience with software is a common sentiment, but we also feel comfort, gratitude, or suspicion. Clifford Nass and Byron Reeves studied some of these tendencies formally, in the lab, where they took classic social psychology experiments but replaced one of the interactants with a computer. What they found is that humans exhibit a range of social emotions and attitudes toward computers, including cooperation and even politeness. It seems that we're wired to treat computers as people.

Persons and interfaces

The concept of a person is arguably the most important interface ever developed.

In computer science, an interface is the exposed 'surface area' of a system, presented to the outside world in order to mediate between inside and outside. The point of an (idealized, abstract) interface is to hide the (messy, concrete) implementation — the reality on top of which the interface is constructed.

A person (as such) is a social fiction: an abstraction specifying the contract for an idealized interaction partner. Most of our institutions, even whole civilizations, are built to this interface — but fundamentally we are human beings, i.e., mere creatures. Some of us implement the person interface, but many of us (such as infants or the profoundly psychotic) don't. Even the most ironclad person among us will find herself the occasional subject of an outburst or breakdown that reveals what a leaky abstraction her personhood really is. The reality, as Mike Travers recently argued, is that each of us is an inconsistent mess — a "disorderly riot" of competing factions, just barely holding it all together.

Etiquette is critical to the person interface. Wearing clothes in public, excreting only in designated areas, saying please and thank you, apologizing for misbehavior, and generally curbing violent impulses — all of these are essential to the contract. As Erving Goffman puts it in Interaction Ritual: if you want to be treated like a person (given the proper deference), you must carry yourself as a person (with the proper demeanor). And the contrapositive: if you don't behave properly, society won't treat you like a person. Only those who expose proper behaviors — and, just as importantly, hide improper ones — are valid implementations of the person interface.

'Person,' by the way, has a neat etymology. It comes from the Latin persona, referring to a mask worn on stage. The traditional (if disputed) derivation is per (through) + sonare (to sound): the thing through which the sound (voice) traveled.

Now if etiquette civilizes human beings, then the discipline of UX civilizes technology. Both solve the problem of taking a messy, complicated system, prone by its nature to 'bad' behavior, and coaxing it toward behaviors more suitable to social interaction. Both succeed by exposing intelligible, acceptable behaviors while, critically, hiding most of the others.

In (Western) etiquette, one implementation detail is particularly important to hide: the fact that we are animals, tubes of meat with little monkey-minds. Thus our shame around body parts, fluids, dirt, odors, and wild emotions.

In UX, we try to hide the fact that our interfaces are built on top of machines: hunks of metal coursing with electricity, executing strict logic in the face of physical resource constraints. We strive to make our systems fault-tolerant, or at least to fail gracefully, though it goes against the machine's boolean grain. It's shameful for an app to freeze up, even for a second, or to show the user a cryptic error message. The blue screen of death is an HCI faux pas roughly equivalent to vomiting on the dinner table. It halts the proceedings and reminds us all too graphically of what's gone wrong in the bowels of the system.

Apple makes something of a religion of hiding its implementation details. Its hardware is notoriously encapsulated. The iPhone won't deign to expose even a screw; it's as close to seamless as manufacturing technology will allow.

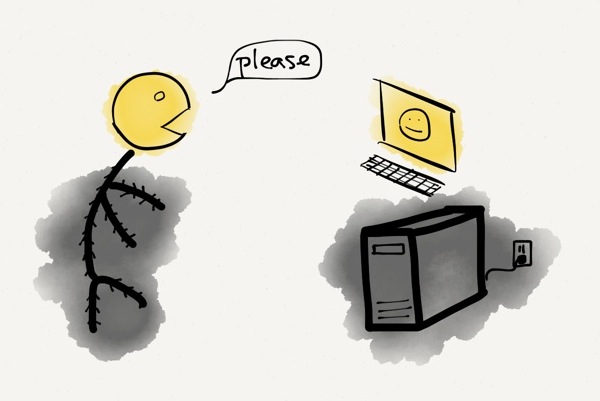

Thus the civilizing process takes in raw, wild ingredients and polishes them until they're suitable for bringing home to grandma. A human with a computer is an animal pawing at a machine, but at the interface boundary we both put up our masks and try our best to act like people.

The morality of design

Sure, you can evaluate an interface by how 'usable' it is, but usability is such a bland, toothless concept. Alan Cooper, UX pioneer and author of About Face, argues that we should treat our interfaces like people and do what we do best: moralize about their behavior.

In other words, we should praise a good interface for being well-mannered — polite, considerate, even gracious — and condemn a bad interface for being rude.

This is about more than just saying "Please" and "Thank you" in our dialogs and status messages. A piece of software can be rude, says Cooper, if it "is stingy with information, obscures its process, forces the user to hunt for common functions, [or] is quick to blame the user for its own failings."

In both etiquette and UX, good behavior should be mostly invisible. Occasionally an interface will go above and beyond, and become salient for its exceptional manners (which we'll see in a minute), but mostly we become aware of our software when it fails to meet our standards for good conduct. And just like humans, interfaces can be rude for a whole spectrum of reasons.

When an interface choice doesn't just waste a user's time or patience, but actually causes material harm, especially in a fraudulent manner, we should feel justified in calling it evil, or at least a dark pattern. Other design choices — like sign-up walls or opt-out newsletter subscriptions — may be rude and self-serving, but they fall well shy of 'evil.'

Yet other choices are awkward but forgivable. Every product team wants its app to auto-save, auto-update, support unlimited undo, animate all transitions, and be fully WYSIWYG, but the time and engineering effort required to build these things are often prohibitive. These apps are like the host of a dinner party who's doing the best he can without a separate set of fine china, cloth napkins, and fish forks. Maintaining the absolute highest standards is always costly, which is one of the reasons manners are an honest signal of wealth.

Finally, there are plenty of cases where the designer is simply oblivious to his or her bad designs. Like the courtly aristocrats of the 17th century (according to Callières and Courtin), a great designer needs délicatesse — a delicate sensibility, a heightened feeling for what might be awkward. (Think "The Princess and the Pea.") Modal dialogs, for example, have the potential to hamper or impede the user's flow. As a designer myself, I was long familiar with this principle, but it took me many years to appreciate it on a visceral, intuitive level. You could say that my sensibilities were insufficiently delicate to perceive the embarrassment, and as a result I proposed a number of unwittingly awkward designs.

Hospitality vs. bureaucracy

Hospitality is the height of manners, its logic fiercely guest-centered. Contrast a night at the Ritz with a trip to the DMV, whose logic is that of a bureaucracy, i.e., system-centered.

When designing an interface, clearly you should aim for hospitality. Treat your users like guests. Ask yourself, what would the Ritz-Carlton do? Would the Ritz send an email from no-reply@example.com? No, it would be ashamed to, though the DMV, when it figures out how to send emails, will have no such compunction.

Hospitality is considerate. It's in the little touches. The most gracious hosts will remember and cater to their guests as individuals. They'll go above and beyond to anticipate needs and create wonderful experiences. But mostly they'll stay out of the way, working behind the scenes and minimizing guest effort.

Garry Tan calls our attention to a great example of interface hospitality: Chrome's tab-closing behavior. When you click the 'x' to close a tab, the remaining tabs shift or resize so that the next tab's 'x' is right underneath your cursor. This way you can close multiple tabs without moving your mouse. Once you get used to it, the behavior of other browsers starts to seem uncouth in comparison.

Breeding grounds

Western manners were cultivated in the courts of the great monarchs — a genealogy commemorated in our words courteous and courtesy.

It's not hard to see why. First, the courts were influential. Not only were courtly aristocrats more likely to be emulated by the rest of society, but they were also in a unique position to spread new inventions. Their travel patterns — coming to court for a time, then returning to the provinces (or to other courts) — made the aristocracy an ideal vector for pollinating Europe with its standards (as well as its fashions and STDs).

Second, court life was behaviorally demanding. Diplomacy is a subtle game played for high stakes. Missteps at court would thus have been costly, providing strong incentives to understand and develop the rules of good behavior. Courts were also dense, and density meant more people stepping on each other's toes (as well as more potential interactions to learn from). And the populations were relatively diverse, coming from many different backgrounds and cultures. Standards of behavior that worked at court would thus be more likely to work in many other contexts.

Behavior at table was under even greater civilizing pressures. Here there was (1) extreme density, people sitting literally elbow-to-elbow; (2) full visibility (everyone's behaviors were on full public display); and (3) the presence of food, which heightened feelings of disgust and délicatesse. A behavior like spitting might go unnoticed during an outdoor party, but not at dinner.

Finally, the people at court were rich. Money can't buy you love or happiness, but it makes high standards easier to maintain.

What can this teach us about the epidemiology of interface design?

Well, if courts were the ideal breeding ground for manners, then popular consumer apps are the ideal breeding ground for UX standards, and for many of the same reasons. Popular apps are influential: they're seen by more people, and (because they're popular) they're more likely to be copied. They're also more likely to get a thorough public critique. They're 'dense,' too, in the sense that they produce a lot of (human-computer) interactions, which designers can study, via A/B tests or otherwise, to refine their standards. They're also used by a wide variety of people (e.g., grandmas) in a wide variety of contexts, so an interface element that works for a million-user app is likely to work for a thousand-user app, but not necessarily the other way around.

So popular makes sense, but why consumer? You might think that enterprise software would be more demanding, UX-wise, since it costs more and people are using it for higher-stakes work — but then you'd be forgetting about the perversity of enterprise sales, specifically the disconnect between users and purchasers. A consumer who gets frustrated with a free iPhone app will switch to a competitor without batting an eyelash, but that just can't happen in the enterprise world. As a rule of thumb, the less patient your users, the better-behaved your app needs to be.

We also use consumer software in our social lives, where certain signaling dimensions — polish, luxury, conspicuous consumption — play a larger role than in our professional lives.

Thus we find the common pattern of slick consumer apps vs. rustic-but-functional enterprise apps. It's the difference between those raised in a diverse court or big city, vs. those isolated and brought up in small towns.

Manners on the frontier

Small towns may, in general, be less polished than the big city, but there's an important difference between the backwoods and the frontier — between the trailing and leading edges of civilization.

If people are moving into your town, it's going to be full of open-minded people looking to improve their lot. If people are moving away from your town, the evaporative-cooling effect means you'll be left with a narrow-minded populace trying to protect its dwindling assets.

Call me an optimist, but I'll take the frontier.

Bitcoin and Big Data are two examples of frontier technology. They're new and exciting, and people are moving toward them. So they're slowly being subjected to civilizing pressures, but they're still nowhere near ready for polite society.

The Bitcoin getting started guide, for example, lists four "easy" steps, the first of which is "Inform yourself." (Having to read something should set off major alarm bells.) Step 2 involves some pretty crude software that will expose its private (implementation) parts to you: block chains, hashrates, etc. Step 3 involves a cumbersome financial transaction of dubious legality. Step 4: Profit?

Bottom line: it's a jungle out there.

Most users would be wise to steer clear of the frontier, which is understandably uncivilized and rough around the edges. But for an enterprising designer, the frontier is an opportunity to tame the wilderness and spread civilization outward.

___

Further reading:

- Principles of hospitality (PDF of a chapter from Remarkable Service)

- Gricean politeness maxims (Wikipedia)

- Startups are frontier communities (Melting Asphalt)

- The Milo Criterion (Ribbonfarm)

Thanks to Diana Huang, Mills Baker, and James Somers for reading drafts of this essay.

Melting Asphalt
